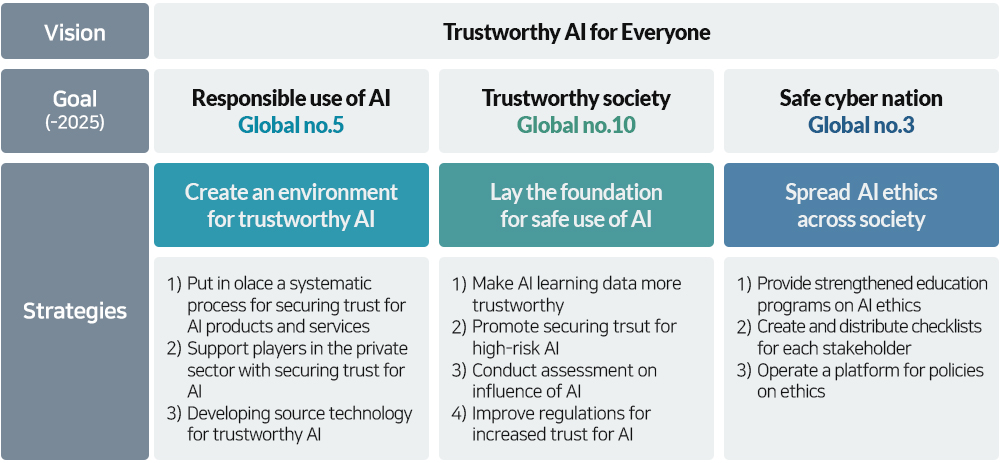

- Presented 3 strategies and 10 action plans to "realize trustworthy AI for everyone"

- To be implemented step by step through 2025

The Ministry of Science and ICT ("MSIT", Minister: Choi Kiyoung) announced the human-centered 「Strategy to realize trustworthy artificial intelligence for everyone」 at the 22nd general meeting of the Presidential Committee on the Fourth Industrial Revolution on May 13th.

As a driving force of the Fourth Industrial Revolution, artificial intelligence has spread across all industries and society and is leading innovation. Its widespread use, however, has also raised unexpected social issues* and concerns.

* The AI chatbot ‘Iruda’ (January 2021), a deepfake of former President Obama (July 2018), the ‘psychopath AI’ developed by MIT (June 2018), etc.

This has led major countries to recognize that gaining social trust in artificial intelligence is the first step toward using it in industry and society. As a result, they have taken active policy measures to realize trustworthy artificial intelligence.

Current policy measures taken by major countries to secure trust in AI

EU

▸Proposed the 「AI bill」 (Artificial Intelligence Act), which focuses regulation on high-risk AI and imposes obligations on providers (April 2021)

▸Established a system under which providers must notify users of the use of ‘automated decision-making’, and users have the right to refuse it, request an explanation, and raise objections (「General Data Protection Regulation」, 2018~)

▸Provided a trustworthiness self-assessment checklist for the private sector and presented three components of trustworthy AI (2019)

US

▸Adopted ‘technically safe AI development’ in the National AI R&D Strategic Plan (2019)

▸Major businesses (IBM, MS, Google) prepared their own AI development principles and promoted self-regulation to realize the ethical use of AI

▸Announced a federal-government-level regulatory guideline aimed at limiting regulatory overreach and taking a risk-based approach; it includes 10 principles for securing trust in AI, such as transparency and fairness (2020)

France

▸Drew up recommendations for realizing AI for humans through an open discussion with 3,000 people from civil society and business (2018)

UK

▸Established 5 ethical codes (April 2018), a guide to using AI in the public sector (June 2019), and guidance on explainable AI (May 2020)

Japan

▸Announced the social principles of 「human-centered AI」, introducing 7 basic principles for all AI stakeholders (March 2018)

Amid these global trends, Korea prepared this strategy in recognition that prompt policy support for realizing trustworthy, human-centered AI is needed if Korea is to become a leader in AI.

Under the vision of “realizing trustworthy artificial intelligence for everyone,” the strategy will be implemented step by step through 2025, built on three pillars (technology, systems, and ethics) and 10 action plans.

Vision, goals, and detailed strategies for trustworthy artificial intelligence

1. Create an environment for realizing trustworthy artificial intelligence

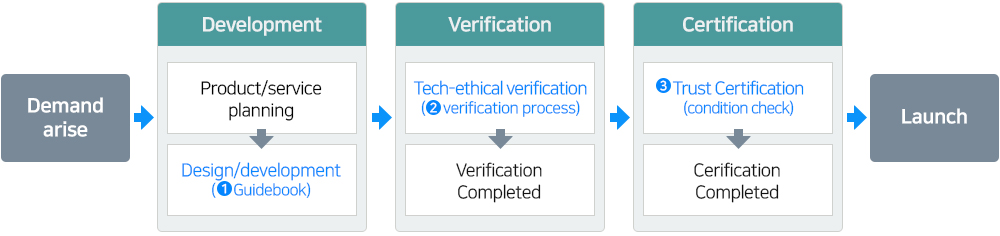

[1] Establish a systematic process for securing trust in AI products and services at each stage of development

Present trust standards and methods that companies, developers, and third parties can refer to at each step of the private sector's process for developing and launching AI products and services.

Support process for AI products and services

[2] Support private-sector players in securing trust in AI

Operate a platform that provides integrated support for ‘data acquisition → algorithm training → validation’ to startups that may lack the technical or financial capacity* to follow the step-by-step process of realizing trustworthy AI.

[3] Develop source technology for trustworthy AI

Promote the development of source technologies that strengthen explainability, fairness, and robustness, so that AI systems can explain the criteria behind their decisions and can detect and effectively remove their own biases with respect to laws, systems, and ethics.

2. Lay the foundation for the safe use of artificial intelligence

[1] Make AI training data more trustworthy

Working with the private sector, develop and distribute systematic standard technologies*, such as a trust assurance index, that both the public and private sectors should follow when building AI training data.

* Specify detailed trust requirements according to the purpose of the training data, and present methods for validating and measuring them.

For the Data Dam initiative, part of the Digital New Deal, overall data quality will be raised by applying trust-securing measures, such as compliance with laws and regulations on copyright and personal information protection, throughout the entire process.

[2] Promote trust in high-risk artificial intelligence

Set criteria for high-risk AI that may pose a potential threat to citizens’ safety or fundamental rights, and require service providers to ‘notify’ users when such high-risk AI is used.

Whether to regulate the rights users may exercise after being notified of the use of high-risk AI, namely to ‘decline to use’ it, to request an ‘explanation of the consequences’, and to ‘raise an issue’, will be reviewed over the mid-to-long term, taking into account global legal and regulatory trends, the impact on industry, social acceptance, and technological feasibility.

[3] Conduct AI impact assessments

To comprehensively and systematically analyze and respond to AI’s impact on citizens’ lives, social impact assessments of intelligent information services will be introduced, as stipulated in the 「FRAMEWORK ACT ON INTELLIGENT INFORMATIZATION」*.

* The State and local governments may survey and assess how the use and spread of intelligent information services, etc. that have far-reaching effects on citizens’ lives affect society, the economy, culture, and citizens’ daily lives.

[4] Improve regulations to increase trust in AI

Among the tasks identified in the 「Roadmap for artificial intelligence laws, systems, and regulations」 (December 2020), systems related to securing trust in AI and protecting users’ lives and physical safety will be improved as follows:

① creating an environment in which industry autonomously manages and monitors AI; ② securing fairness and transparency in platform algorithms; ③ preparing standards for disclosing algorithms while protecting trade secrets; ④ preparing standards for high-risk technologies

3. Spread AI ethics across society

[1] Strengthen education programs on AI ethics

Prepare a comprehensive curriculum covering AI’s impact on society and social and humanistic perspectives such as human-AI interaction. Based on this curriculum, design and implement ethics education programs customized for researchers, developers, and citizens.

[2] Create and distribute checklists for each stakeholder group

Design and distribute detailed action checklists that researchers can refer to when checking whether they are following ethical standards in their daily lives or work.

[3] Operate a platform for ethics policies

Create and operate a venue for open discussion where members of society from academia, business, civic organizations, and the general public can come together to share ideas on the ethics of AI use and discuss ways to improve it.

For further information, please contact Deputy Director Kim Hyun (E-mail: kh0522@korea.kr) or Park Yae Seul (E-mail: bom1379@korea.kr) of the Ministry of Science and ICT.